Eight ways AI can ruin your weekend & possibly your life

Can AI kill you? Maybe, but there are plenty of other bad things it can do to you first.

The Terminator driving a Tesla. Source: Midjourney

There's been a whole lot of news lately about the nastiness advanced artificial intelligence might inflict upon us puny humans.

Just yesterday, the New York Times published a story about Geoffrey Hinton, whom some people have called 'The Godfather of AI.' [1] Hinton is the latest in a series of AI researchers who've publicly declared their fears and/or regret about the monster they've created.

Hinton is a pioneer in the concept of 'neural networks' -- complex computer systems that learn by teaching themselves how to 'think.' He just quit his job at Google so he could speak freely about the potential danger AI poses to humanity.

The NYT's Cade Metz visited Hinton at his home in Toronto to interview him. Here's part of what he had to say:

Down the road, he is worried that future versions of the technology pose a threat to humanity because they often learn unexpected behavior from the vast amounts of data they analyze. This becomes an issue, he said, as individuals and companies allow A.I. systems not only to generate their own computer code but actually run that code on their own. And he fears a day when truly autonomous weapons — those killer robots — become reality.

“The idea that this stuff could actually get smarter than people — a few people believed that,” he said. “But most people thought it was way off. And I thought it was way off. I thought it was 30 to 50 years or even longer away. Obviously, I no longer think that.”

My difficulty with people who warn us about AI doomsday is that they're all kind of vague. That AI could possess "Godlike intelligence" is a common refrain. [2] But what does that mean, exactly?

The real problem with AI is not AI. The problem is how human beings use it. More to the point: What decisions are driven by these algorithms? And once these decision-making systems are set in motion, can they ever be stopped?

Here, just in time to ruin your weekend [3], are some actual bad things that may happen to you as a result of algorithms run amok, in ascending order of awfulness.

(It's important to note that most of these things already happen without any help from AI. The technology just makes them much easier to do at scale, and makes it much harder to hold humans accountable for them.)

1. You get spoon-fed more bullshit

As if well-funded corporate propaganda networks weren't already bad enough, there's this: AI being used to deliberately churn out misinformation. NewsGuard, an independent service that uses trained journalists to evaluate news sites, has identified nearly 50 fake news websites that appear to be written almost entirely by AI.

2. Your creative work gets stolen

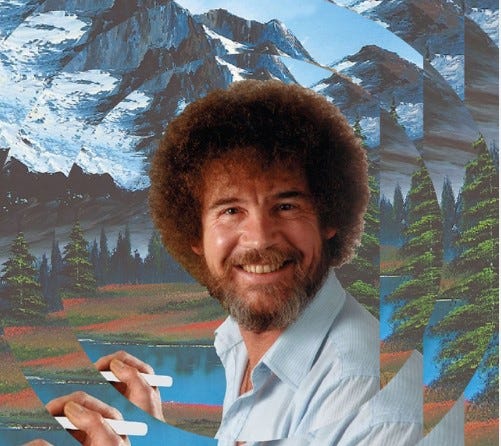

Image-generating tools like DALL-E, Midjourney, and Stable Diffusion can produce really amazing art. (I use them extensively for this Substack.) But they had to learn how to draw somewhere, and it wasn't by watching Bob Ross on PBS. [4] These engines were trained on existing art, without compensating the original human artists for its use. So the artists are now suing the operators of these inanimate art thieves.

Bob Ross may be gone, but his hair lives on.

3. You accidentally spill company secrets

Samsung just banned employees from using tools like ChatGPT and Google Bard. So have JP Morgan, Bank of America, and Citigroup. Why? Because whatever you share with ChatGPT et al. may be used to further train the AI, and could show up in the wild just about anywhere.

It's sort of like asking for relationship advice from Gossip Girl. Before the day is done, your dirty little secrets will be spread all over town.

4. Your medical records get buttered all over the Interwebs

Epic, the 800-pound gorilla of electronic medical record storage, is now sharing patient records with Microsoft and OpenAI's latest large language model, GPT-4. The reason? To help docs create auto replies to patients, and of course, to help massive for-profit healthcare providers to cut costs. [5]

These records are aggregated and "anonymized," but that doesn't mean somebody can't match your medical data to publicly available information to figure out who you are. In fact, researchers have devised algorithms that can re-identify you from "anonymous" data.
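For the curious, here is a minimal sketch of how that kind of matching can work. It is a toy, not anybody's actual method: every name, ZIP code, birth date, and diagnosis below is invented, and the choice of ZIP, birth date, and sex as the matching fields is my own assumption for illustration. The idea is simply that a record with no name on it can still be pinned to a person if its leftover details match exactly one entry in some public list.

```python
# Toy linkage sketch. All records here are invented for illustration.
# Assumption: the "anonymized" data still carries these three fields.
QUASI_IDENTIFIERS = ("zip", "birth_date", "sex")

anonymized_records = [
    {"zip": "02138", "birth_date": "1961-07-31", "sex": "F", "diagnosis": "hypertension"},
    {"zip": "02139", "birth_date": "1975-01-12", "sex": "M", "diagnosis": "asthma"},
]

# Hypothetical public list (think voter roll or people-search site).
public_records = [
    {"name": "Jane Doe", "zip": "02138", "birth_date": "1961-07-31", "sex": "F"},
    {"name": "John Roe", "zip": "02139", "birth_date": "1975-01-12", "sex": "M"},
    {"name": "Jim Poe", "zip": "02139", "birth_date": "1975-01-12", "sex": "M"},
]


def reidentify(anonymized, public, keys=QUASI_IDENTIFIERS):
    """Return {name: diagnosis} for records that match exactly one public entry."""
    # Group public names by their combination of quasi-identifiers.
    index = {}
    for person in public:
        index.setdefault(tuple(person[k] for k in keys), []).append(person["name"])

    matches = {}
    for record in anonymized:
        names = index.get(tuple(record[k] for k in keys), [])
        # Only a unique match counts; shared details leave the record ambiguous.
        if len(names) == 1:
            matches[names[0]] = record["diagnosis"]
    return matches


matches = reidentify(anonymized_records, public_records)
print(matches)
```

In this made-up data, Jane is the only person with her combination of details, so her record is exposed. John and Jim share theirs, so the asthma record stays ambiguous. The more fields a dataset keeps, the fewer people get to hide in a crowd like that.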

5. You get arrested

AI-driven "predictive policing" software is now used by law enforcement agencies in half a dozen states to identify "crime hotspots." How did they train these systems? By using existing criminal records.

Three guesses as to whose neighborhoods are typically designated "crime hotspots." Racist garbage in, racist garbage out.
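Here is a toy sketch of that feedback loop. It is not how any real policing product works; the neighborhood names, starting numbers, patrol counts, and the "one recorded arrest per 10 patrols" rule are all assumptions I made up. Both neighborhoods have exactly the same underlying crime rate. The only difference is that one starts with a few more arrests on the books.

```python
def simulate(recorded, rounds=5, hotspot_patrols=80, other_patrols=20,
             patrols_per_arrest=10):
    """Send most patrols to whichever area has the most recorded arrests.

    Assumption: every area has the same true crime rate, so new recorded
    arrests depend only on how many patrols are sent there.
    """
    recorded = dict(recorded)  # work on a copy, leave the input alone
    for _ in range(rounds):
        # Pick the hotspot once per round, before any counts change.
        hotspot = max(recorded, key=recorded.get)
        for hood in recorded:
            patrols = hotspot_patrols if hood == hotspot else other_patrols
            recorded[hood] += patrols // patrols_per_arrest
    return recorded


# Hypothetical starting records: a 10-arrest gap, nothing more.
history = {"Northside": 55, "Southside": 45}
result = simulate(history)

gap_before = history["Northside"] - history["Southside"]
gap_after = result["Northside"] - result["Southside"]
print(result, gap_before, gap_after)
```

More patrols produce more recorded arrests, which justify more patrols. In this toy run the gap between the two neighborhoods quadruples in five rounds, and the data looks like it is confirming the original hunch the whole time.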

The precogs would like a word with you. Source: Minority Report

6. Your self-driving car kills you

Teslas running on Autopilot have been involved in roughly 300 crashes, with 31 fatalities verified so far. (You know things are bad when there's a website called Tesladeaths devoted to the number of people your products have killed.) In February, Tesla recalled 362,758 vehicles due to problems with the self-driving beta -- like, for example, its habit of chasing parked fire trucks. [6]

7. Your friendly totalitarian government tracks your every move

To me, the scariest thing about AI is how it can be used for mass surveillance. China has helpfully provided an excellent example of how 'AI-tocracy' works at scale. Per a July 2022 report from Voice of America:

An artificial intelligence (AI) institute in Hefei, in China’s Anhui province, says it has developed software that can gauge the loyalty of Communist Party members... The institute posted a video [boasting] about its “mind-reading” software, which it said would be used on party members to “further solidify their determination to be grateful to the party, listen to the party and follow the party.”

And if you think this can't happen in 'Murica, I suggest you take a closer look at some of the people running for (or already in) office.

8. Robots run amok

This is the scenario most beloved by Hollywood: Robotic killing machines attempting to exterminate humanity (or just using our bodies' electrical activity as an energy source). Whether you subscribe to The Terminator or The Matrix doomsday scenarios, it's not a pretty picture.

Fact is, the military is already using AI. Former Secretary of Defense Ash Carter issued his own strongly worded warning about that back in 2017, which I wrote a bit about here. Carter said the US will never allow AI to operate weapons of lethal force without human supervision. Let's hope his successors (and his counterparts in China, Russia, Iran, North Korea, etc.) agree.

Have a great weekend, everybody.

So that was cheery, wasn't it? Post less gloomy thoughts and/or photos of adorable puppies below.

[1] Take the Skynet, leave the cannoli.

[2] Let's be honest for a second here. Has God really done that good a job with humanity? I think there's clear room for improvement.

[3] Happy Cinco de Mayo, Kentucky Derby, and NBA Playoffs to you, too.

[4] The website 538 analyzed every Bob Ross painting. More than 90% of them contain at least one tree, 56% deciduous and 53% conifers. Isn't your life just a little more complete knowing that?

[5] Tip of the cap to Doc Gurley for sharing this info.

[6] In other words, "Elon, take the wheel" is probably not the best strategy for getting home safely.
