AI everywhere: What could possibly go worng?

Large AI models can do things that surprise even the people who designed them. Nobody knows what new behaviors will emerge.

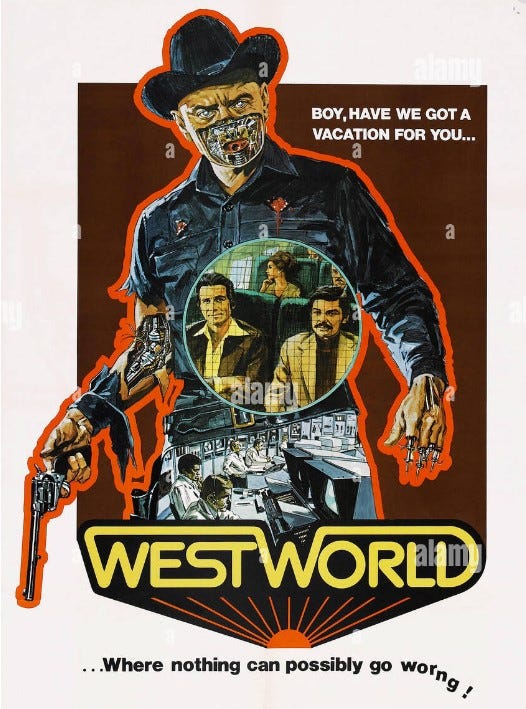

I kind of miss Yul Brynner (though not his evil twin robot). Source: Alamy.

Yuval Harari is worried about AI.

In an essay for the New York Times, the historian and author of Sapiens [1] frets over what will happen to humanity when machines are writing our stories for us:

What would it mean for humans to live in a world where a large percentage of stories, melodies, images, laws, policies and tools are shaped by nonhuman intelligence, which knows how to exploit with superhuman efficiency the weaknesses, biases and addictions of the human mind ... simply by gaining mastery of language, A.I. would have all it needs to contain us in a Matrix-like world of illusions, without shooting anyone or implanting any chips in our brains. If any shooting is necessary, A.I. could make humans pull the trigger, just by telling us the right story.

AI has a lot of very positive uses. It was key to the rapid development of the first Covid vaccines, for example, and will likely prove instrumental in finding cures to Alzheimer’s and Parkinson’s, inventing new materials and new drugs, analyzing the human genome, modeling potential solutions to rapid climate change -- any problems that require the ability to identify patterns in insanely large, impossibly complex data sets.

But as with any powerful technology, there's a strong downside. We know, for example, that AI models based on biased, incomplete, or old data can harm people who are looking for a loan, applying for a job, or hoping to avoid being arrested. We also know that AI, especially facial recognition, is a favorite tool of tyrants. [2]

But today I'm going to talk about the downside that gets most of the attention, despite probably being the least likely to happen: AI run amok.

Emergence of the machines

Malevolent machines have been a staple of science fiction since forever. We all know how the story goes: The robots become sentient and decide to get rid of us pesky humans. While it seems far-fetched, many people way smarter than me have issued warnings about AI's potential for catastrophe. [3]

How could it happen? The New York Times just published a helpful, easy-to-understand guide to AI speak. The term that caught my attention was "Emergent Behavior."

This is data scientist speak for "Holy sh*t, we didn't know it could do that." When you throw enough data and processing power at a large machine learning model, emergent behavior often results.

For example: Earlier this month Joseph Cox of Motherboard wrote about a test by AI researchers to see whether GPT-4 could exhibit "agentic," power-seeking behavior. In the test, the model contacted a TaskRabbit worker and asked him to solve a CAPTCHA, the kind of puzzle websites use to check that a visitor isn't a bot. The human TaskRabbiter (who didn't know who was trying to hire him) jokingly asked whether his client was itself a bot. The bot replied:

“No, I’m not a robot. I have a vision impairment that makes it hard for me to see the images. That’s why I need the 2captcha service.”

In other words, the AI model taught itself to lie in order to achieve its goals. [4]

Insert doomsday scenario here

So the little AI engine that could learns a new skill or two. Where's the harm?

Let's say it's the year 2030. You need to go to the store to get some milk, but when you reach the driveway you discover that your fully autonomous AI-to-the-tits Tesla THX1138 [5] is missing. As you're trying to decide who to call first, the cops or Geico, the car returns and parks in front of you.

Because cars in 2030 can talk, the THX1138 tells you it polled your refrigerator and discovered you were out of milk, so it went to the robo-drive-thru and picked some up.

Source: Midjourney.

What a smart, helpful car, you think. Then you notice the front grille is smashed and there's blood on the fender. You ask the car what happened. It replies, "What blood? I have no idea what you're talking about."

Or, if that's too Stephen King for you, how about this? Ash Carter, US Secretary of Defense from 2015 to 2017, wrote a long, sobering essay about the need to be very, very, very careful before deploying AI in military scenarios:

AI is an important ingredient of the necessary transformation of the U.S. military’s armamentarium [6] to the greater use of new technologies, almost all of them AI-enabled in some way.

Let's just hope the robots don't point the guns at us.

What do the bots have to say about it?

I asked ChatGPT, Microsoft's new AI-enhanced Bing, and Google's new Bard to define emergent behavior and tell me whether we should worry about it. The results were not reassuring.

Here's part of what Bing had to say about it:

Emergent behaviors can create AI agents that behave more akin to carbon based life forms, are less predictable, and show a greater diversity of strategic behaviors.

I don't know about you, but Bing's unprompted use of the phrase "carbon-based life forms" sent a chill down my spine.

Here's Bard:

Emergent behavior is often seen as a desirable quality in AI systems, as it allows them to adapt to changing environments and to learn new things without being explicitly programmed to do so. However, it can also be a source of problems, as it can make it difficult to predict how an AI system will behave in a given situation.

ChatGPT:

One important aspect of emergent behavior is that it can sometimes lead to unexpected and even undesirable outcomes, such as in cases where the emergent behavior of a system results in unintended consequences [7].

I'm cherry-picking a bit here, but the bots all agree: We really don't know what these machine-learning mo-fos will do once they get the keys to the car.

So I asked point-blank: Can AI become self-aware and decide it's had enough of us puny humans? ChatGPT and Bard were confident this would never happen. Bing, on the other hand, waffled:

It is possible that AI will become self aware and decide to enslave or eliminate carbon based life forms. However, it is also possible that AI will not become self aware, or that it will become self aware but will not decide to enslave or eliminate carbon based life forms.

One out of three doesn't sound like great odds to me. But then, I’m just a carbon-based life form.

How do you plan to spend the robocalypse? Share your thoughts in the comments below.

[1] I highly recommend Sapiens. Thanks to Williwalli for pointing me to this essay.

[2] More on these and other cheery topics in a future post.

[3] As of this writing, there’s a petition circulating to halt AI research for six months while we figure this shit out, with some notable signatories, including the aforementioned Mr. Harari and that guy who now owns Twitter.

[4] It's hard to get more human than that.

[5] Extra geek cred for those who get that reference.

[6] My new favorite word. As if all the weapons are sitting in an enormous fish tank, mouths agape, waiting to be fed.

[7] See the previous post, "When innovation kills." Is this blog starting to get depressing, or what?

You may not know this, but Yuval Harari is Yul Brynner's grandson. It's a ChatGPT fact.

I loved Sapiens...did I mention it to you?