NY Times to OpenAI: U Can't Touch This

What do hip-hop music and generative AI have in common? More than you might think.

MC Hammerbot. He’s dope on the floor, magic on the mic. Source: Midjourney.

Late last month, the New York Times filed a suit against OpenAI and Microsoft, accusing the $80 billion startup (and its deep-pocketed benevolent overlord) of violating its copyrights. Apparently, OpenAI used the Gray Lady's content to train ChatGPT, which resulted in everyone's favorite gen AI model spitting out Times articles verbatim in response to certain prompts.

As I've noted previously, this suit has the potential to bring a lot of gen AI companies to their knees, requiring them to either throw out their existing models and retrain them using non-copyrighted material, or pay all the folks whose copyrighted material they used.

ICYMI: Will the last artist left on Earth please turn out the lights?

But what it really reminds me of is hip-hop and the controversy over music sampling. Today, I constantly hear snippets of old songs woven into the fabric of new ones. But 30 years ago, this was a Big Friggin' Deal. According to a history of music sampling on the Red Bull [1] website:

Many hip-hop producers took samples on a “use first, worry later” basis, and this wasn't without its problems. Beastie Boys are still paying for their 1989 homage to ‘70s funk, Paul’s Boutique. A relative flop at the time, the album has become a blueprint for creative sampling, containing anything from 100 to 300 musical excerpts. In July, 2019, the album’s recording engineer, Mario Caldato Jr, recalled how over US$250,000 was spent on legal clearances, and that “the list of samples on the album is so long – they [Paul’s Boutique producers The Dust Brothers] are still getting sued over it.”

Eventually, the recording industry came up with a two-step solution to this: Step 1: Ask permission. Step 2: Pay Da Man.

For example: MC Hammer's "U Can't Touch This," which essentially added new lyrics, rapping, and some jiggy parachute-pants dance moves over the iconic bass line of Rick James' "Super Freak," was the subject of a copyright infringement lawsuit by James. They settled it by giving James a songwriting credit, along with a healthy chunk of the massive royalties that song produced. [2]

In my house, it's always Hammer Time — parachute pants optional.

Transform this, m*****f****r.

"Use first, worry later" is a good description of how OpenAI (and other LLM creators) apparently went about training their models. They are leaning very heavily on a Fair Use argument, basically saying that a) the material was available on the public Internet, and b) the act of using it to train their AI models was transformative, "using the source work in completely new or unexpected ways."

You know, like Andy Warhol and Campbell's Soup cans. Or just about everything Weird Al Yankovic does.

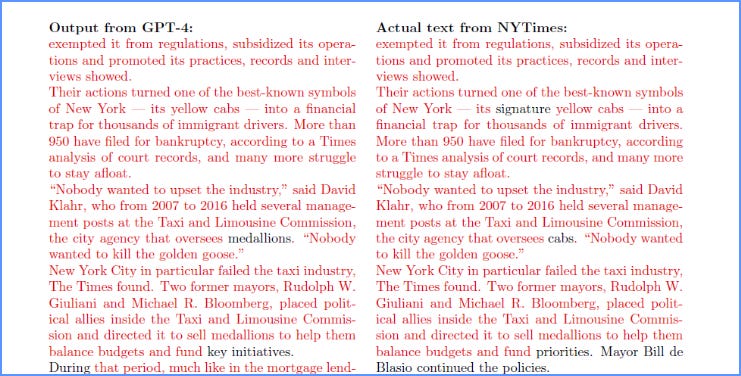

The problem? ChatGPT proved to be more regurgitative than transformative. In its complaint, the Times noted that ChatGPT quoted nearly verbatim from several of its stories. Like this one, for example:

OpenAI published a public response to the charges in the suit this week, and it's pretty weak sauce. It boils down to: We love newspapers, training is fair use, you can opt out if you want [3], regurgitation is a "rare bug" we are attempting to swat, and the Times cheated in producing examples of stolen content. Per the blog post:

Interestingly, the regurgitations The New York Times induced appear to be from years-old articles that have proliferated on multiple third-party websites. It seems they intentionally manipulated prompts, often including lengthy excerpts of articles, in order to get our model to regurgitate. Even when using such prompts, our models don’t typically behave the way The New York Times insinuates, which suggests they either instructed the model to regurgitate or cherry-picked their examples from many attempts.

So to recap this argument: Our AI model doesn't regurgitate, well it does sometimes but that's a mistake, and in any case it was only repeating material someone else stole from the Times site, and the stuff was really old anyway, and our model doesn't act the way the Times says it does despite the fact it did exactly that, and liar liar pants on fire.

At the same time, though, the Times and OpenAI were actually negotiating about compensating the Gray Lady for using 'her' articles. Maybe the lawsuit is an attempt to get them to add a few zeroes to their offer? I guess we’ll find out.

Paper cuts

The Times' main complaint isn't actually about stealing. It's about competition. The paper is worried that ChatGPT and its robot cousins will steal readers from it, using the Times' own reporting. And it should be. This is exactly what happened when newspapers first started putting out digital editions in the early 2000s.

A news outlet would break a story, and within minutes hundreds of second- and third-tier "news" sites would run their own version of the story, cribbed directly from the original, often without any acknowledgment of where they got it. And they would reap the benefits of any web traffic Google sent their way. [4] That's just one of many reasons why newspapers are almost extinct, but it's a big one.

ICYMI: All the news that’s fit… for robots?

If you could just ask ChatGPT or some other gen AI model, "What's the news today?" and it answered, using material it scraped from the organization that paid actual reporters actual money to generate it, why would you ever pay for a digital subscription?

The Times wants its cut. So will thousands of other publications, writers, singers, and artists whose work has been used to teach robots. The solution to all of this? Pay Da Man.

Break it down!

Who’s your favorite OG rap master? Represent in the comments below.

[1] Go figure. Apparently music also gives you wings.

[2] Hammer apparently made about $33 million on that song in 1990 ($70 million today), and promptly spent all of it. Per American Songwriter: "In October 1990, MC Hammer paid $5 million for a 12.5-acre property in Fremont... Hammer demolished the property’s 11,000 square-foot mansion and replaced it with a 40,000 square-foot custom-made home that included a bowling alley, marble floors, two swimming pools, multiple tennis courts, a recording studio, four dishwashers, a rehearsal hall, 17-car garage, and a baseball field."

[3] A bit too late for any existing models though, eh? Kind of like when you go out of town for the weekend and your teenagers have a party and trash your house, then when you get home and survey the damage they promise to never do it again if you ask nicely.

[4] I call them "repeaters, not reporters." And yes, it's happened to me, too.

I can hardly wait for us to all be reading AI hallucinations we think are NYT articles by bots we think are reporters about disinformation we think is news. Or wait, what? ...aren't we all doing that already?