Garbage garbage everywhere, & soon it starts to stink

What data went into training that ginormous AI language model? A dumpster full of webtrash.

Dumpster diving robot. Source: Midjourney.

There's an old saying in the computer biz: Garbage in, garbage out. It means that if you feed crappy data into any kind of software, it will inevitably spit out crappy results. [1]

This ironclad rule has a direct application to the large language models that fuel the current tsunami of generative AI tools like ChatGPT, Google's BARD, Bing Chat, et al, that we are suddenly awash in.

These tools were created by absorbing an enormous amount of data and using it to train machine learning models. And where did this data come from? The Internet, in all its glorious awfulness. And that turns out to be a wee bit problematic.

Scraping the bottom of the barrel

The people who build these models do this in large part by 'scraping' data from websites. What does that mean, you might ask?

Imagine someone wandering down your street in the predawn hours, before your local waste management truck rolls by to empty the trash bins. This person dumps your bins onto the sidewalk, spreads out the trash, takes photos of everything, stuffs it all back in the bin, then moves to the next house on the block and does it all over again.

You might not care; it's trash, after all. Or you might care a lot; we tend to put some very personal and potentially embarrassing things in our trash, which you might not want some random stranger pawing through. [2]

And of course, that stranger didn't ask you if it was OK to go through your trash. They just did it. This is not illegal by the way, but it is a bit rude. [3]

A deep dive into the dumpster

Most of the time, we don't even know what elements of webtrash have been used to teach chatbots to mimic actual humans. But in one particular case we do, thanks to a report published earlier this week by the Washington Post.

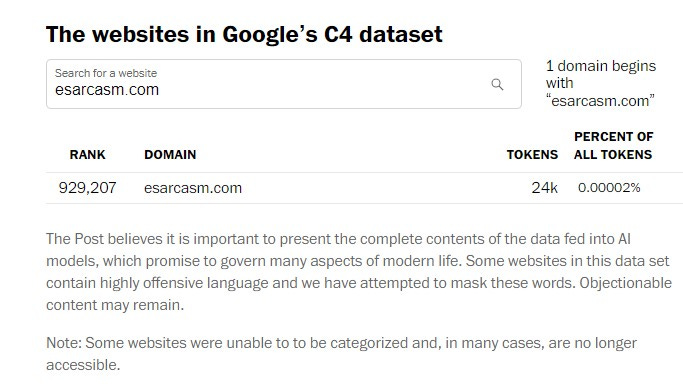

The Post partnered with AI researchers to do a deep dive into Google's C4 dataset, which has been used to train large language models used by Google and Facebook. [4] Information from 15 million websites went into training this model, which is still less than the amount of data used to train other models.

It then ranked the websites that were used most often, across a number of categories (news and media, arts & entertainment, technology, business and industrial, etc).

It's not all trash, of course. The top sources it used were Google's patent database, Wikipedia [5], and Scribd. News sources (like pbs.org), business sites (fool.com), travel (booking.com), education (coursera.org) all were highly ranked.

But the choices were often odd, to say the least. The Post story included a helpful search engine you can use to see what else is in that trove of 15 million sites. For example, it drew from nearly 1,500 sites with the word 'porn' in the URL. More than 5,000 sites contained "Fox," many of them associated with the alleged news network. Foreign and domestic propaganda sites and white supremacy outlets were among the more commonly used sites. Qanon made a token appearance, as did 4chan, Stormfront, Pastebin, and CardingForums. [6]

Weirdest of all, a geek humor site I created with fellow digital reprobate JR Raphael [7], and then abandoned two years later, is in there.

Hey, we made it into the top 1 million! Woot!

We last published something on eSarcasm.com back in 2011, I believe. Pretty sure we took it offline for good in 2015 or 2016. That gives you an idea of how old the data in that language model is, as well as how desperate the researchers must have been for content to scrape.

Mystery train

Also included in that trove of scraped material: personal blogs, creative portfolios, voter registration records, and a lot of copyrighted material -- researchers counted more than 200 million copyright symbols © in that tranche of data.

There has been a strong pushback against AI researchers using copyrighted materials to build their Frankenbots. The artists whose work was used to train image generators like DALL-E or Midjourney without a) permission, or b) compensation have a right to be peeved — especially if these tools start to put them out of a job. Several have filed infringement lawsuits against Microsoft, OpenAI, and other builders of machine learning models.

Reddit, the massive online message board community, has announced that it will no longer tolerate any more free data scraping by AI researchers. If you want to build the next life-altering chatbot by eavesdropping on conversations in r/longfurbies or r/breadstapledtotrees, you're gonna have to pony up.

Pink is the new black. Source: TheNextWeb by way of Reddit

What's in the bag?

Occasionally, large language models like ChatGPT just make shit up. Researchers typically attribute that to AI "hallucinations" — they're programmed to respond to prompts, not to fact check themselves. But I wonder if they might also be regurgitating things they "learned" from less- than-reputable websites.

I can understand the argument that, for an AI model to truly understand how humans communicate, it needs to embrace all forms — the good, the bad, the ugly, and the Internet. (Imagine the faux uproar that would be generated if — heaven forfend! — you attempted to use a 'woke' training dataset.)

But before we let these things loose to make decisions about our lives, or replace human-generated content, we need to know what went into their creation -- how they were raised and what they were taught along the way.

Because we all already have enough garbage in our lives.

How would you feel if your personal information was used to train an AI bot without your knowledge or permission? Post your thoughts in the comments below.

[1] Or, to use another metaphor, you can't make Chicken Kiev from chicken scat. Something I try to explain to my marketing clients, usually without much success.

[2] For example: empty bottles of booze, used pregnancy tests, dogeared copies of JUGS magazine, AOL discs, etc.

[3] In California v. Greenwood (1988), SCOTUS ruled that people do not have a reasonable expectation of privacy when it comes to trash. That's why reporters chasing a story will sometimes go dumpster diving outside the houses of the people or companies they're investigating. (And you think this job is easy.) By extension, if you publish something on the web, you've made it available to the public to consume -- and that 'public' includes machines.

[4] C4 stands for Colossal Clean Crawled Corpus, and if that sounds to you like something decayed and wriggling with maggots, well you wouldn't be the only one.

[5] I have given Wikipedia a fair share of grief over the years as "the encyclopedia that any idiot can edit, and many do." I have to admit it's gotten a lot better of late in terms of policing inaccurate content, though it's far from perfect. But that changes from day to day, even hour to hour. So the question then becomes, what parts of Wikipedia did researchers scrape, and when did they do it?

[6] Respectively, havens for conspiracy nutbaskets, misogynists+pedophiles, Nazis, black-hat hackers, and credit card thieves.

[7] Benevolent overlord of the Android Intel newsletter, which you should definitely subscribe to if you own one of those phones. Tell him I sent ya.

Why ya gotta hate on AOL...? But seriously, garbage in/garbage out is such a truism...but I think, the point about old web sites may not be as objective -- whose to say if LIFO or FIFO is the best approach? Feel like it can't be binary...it has to be considered based on what changes over time.

For instance a website/page describing COVID symptoms and/or sources in 2019 vs. one in 2023 will be very different and have quite distinct value as fodder for AI generated blather...