Can you still believe your eyes & ears? Probably not.

Most of us grew up assuming media was genuine until proven otherwise. AI fakes have changed that assumption, forever.

Not the actual Nina Schick, but an amazing recreation. Source: YouTube.

This is the 16th chapter (episode?) of Cranky Old Man Yells at Internet, and 14 of them have been about artificial intelligence in one form or another. Being 'All AI, All The Time' is not really the intent of this thing, as much as it has one; I plan to cover other topics as well... eventually. It's just that there is so much happening in the space right now I could probably write about it every day. (Yes, there's a ton of hype, but this really is a major shift in how we interact with technology, and how it interacts with us, whether we want it to or not.)

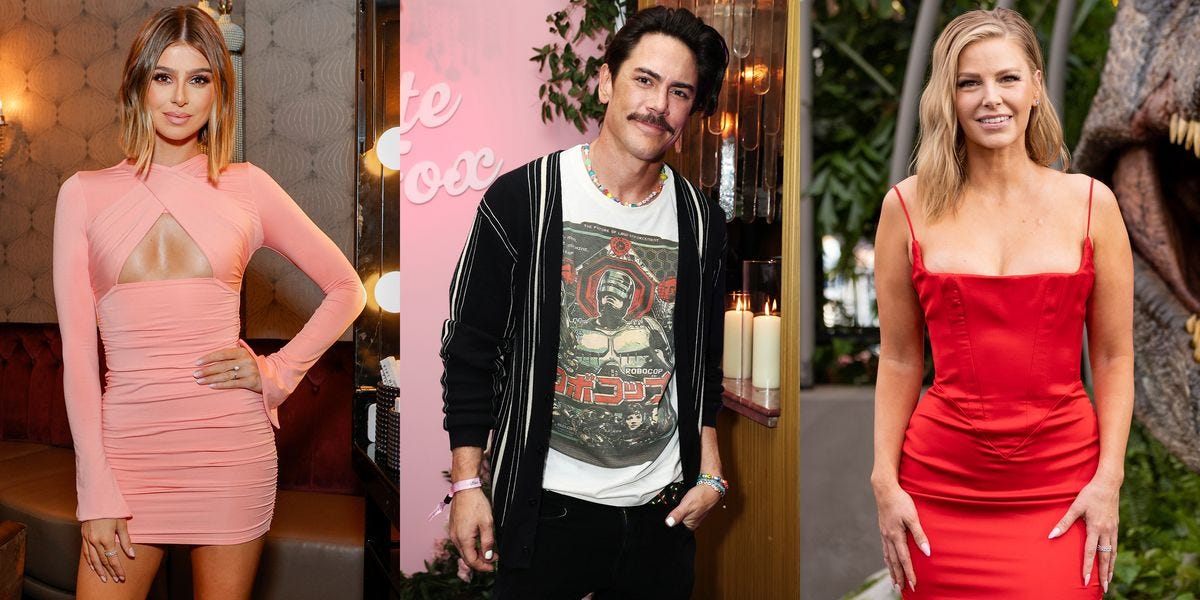

If you have no interest whatsoever in AI, that's fine, I won't mock you. [1] You're a busy person with better things to worry about -- raging pandemics, melting glaciers, killer tornadoes, the mushroom zombie apocalypse [2], the protracted death of democracy, and how that total douche-nozzle Tom could ditch sweet Ariana for that slut Raquel.

The mustache alone should have been enough of a clue. Source: Getty Images.

I get it. Just remember, if you wake up five years from now and wonder, Where the hell did all these robots come from? Or, How did that asshole manage to get elected? Don't say I didn't warn ya.

Trust never sleeps

Last time out I wrote that at some point we may all be asked to provide "proof of personhood" to verify that we are in fact humans. Long before that happens, however, we'll need to deal with a more immediate and difficult problem: determining the veracity of visual and audio media.

Like many otherwise jaundiced consumers of media, I too got fooled by the photo of the Pope in a puffy coat. It was "drip," as the kids say. [3]

Who wore it better? Sources: Twitter, University of Michigan.

Then there's a seemingly endless number of President Biden audio fakes, like this one:

Deepfakes have gotten so good, so quickly, that we've all been caught a little bit off guard. Not all of them are that convincing, though. For example:

No, the former guy was not joined by an army of zombies marching up Fifth Avenue in Manhattan. That's an easy fake to spot, in part because, as Stephen Colbert said, "He's actually walking." Another clue: the mangled faces of the people behind him. (They probably used Gabby, the racist frog AI image generator.)

Bottom line: We need to change our approach to media. We have to stop trying to determine whether an image or video or audio is fake, and demand proof that it is real. It's the opposite of "Trust But Verify," the approach adopted by the US and USSR for checking compliance with nuclear arms treaties (also an effective way to parent teenagers [4]).

We need to shift to DON'T trust UNTIL you verify.

Look for AI AI AI on the label label label

Probably the best way to make this a standard part of how we consume media is for the creators of said media to watermark their work using cryptography. Nina Schick [5], an AI researcher who wrote a book about deepfakes three years ago and has her own fascinating Substack newsletter, recently collaborated with the companies Revel.ai and Truepic on a deepfake of herself. What makes this fake unique is that it carries a cryptographic watermark that identifies it as such.

By assigning media a unique number derived from its contents (cryptography) and storing that number in a distributed database (blockchain), creators can certify the authenticity of what they've made. If the image (video, audio) is altered in some way, the number no longer matches, and that change can also be indicated on the blockchain. [6]
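For the curious, here is roughly what that idea looks like in code. This is a toy sketch, not how Truepic or Revel.ai actually do it. It assumes the "number" is a SHA-256 hash of the file's bytes, and it uses a plain Python list (the made-up `ToyLedger` class) as a stand-in for a real blockchain. The creator name and sample bytes are invented for illustration.

```python
import hashlib


def fingerprint(media_bytes: bytes) -> str:
    """Return a SHA-256 hex digest that changes if even one byte changes."""
    return hashlib.sha256(media_bytes).hexdigest()


class ToyLedger:
    """Hypothetical append-only list standing in for a distributed database."""

    def __init__(self):
        self._entries = []

    def register(self, media_bytes: bytes, creator: str, note: str) -> dict:
        # Chain each entry to the previous one so history is hard to rewrite.
        prev_hash = self._entries[-1]["entry_hash"] if self._entries else "0" * 64
        fp = fingerprint(media_bytes)
        entry_hash = hashlib.sha256(
            f"{prev_hash}|{fp}|{creator}|{note}".encode()
        ).hexdigest()
        entry = {
            "fingerprint": fp,
            "creator": creator,
            "note": note,
            "prev_hash": prev_hash,
            "entry_hash": entry_hash,
        }
        self._entries.append(entry)
        return entry

    def lookup(self, media_bytes: bytes):
        """Return the matching entry, or None if these exact bytes were never registered."""
        fp = fingerprint(media_bytes)
        for entry in self._entries:
            if entry["fingerprint"] == fp:
                return entry
        return None


ledger = ToyLedger()
original = b"pretend these bytes are a video file"  # made-up sample data
ledger.register(original, creator="Example Creator", note="AI-generated")

altered = original + b"!"  # a one-byte edit

print(ledger.lookup(original) is not None)  # the registered file is found
print(ledger.lookup(altered) is None)       # the altered file is not
```

The point of the sketch: nobody has to eyeball the video to judge it. You hash the file you were sent and check whether anyone ever vouched for those exact bytes.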

The same technology can be used to identify non-AI-generated media as well. A mobile camera app that uses Truepic can show you when it was taken, where it was taken, what device was used, and when it was certified as the real McCoy. The technology is already being used to validate insurance claims and real estate appraisals, among other boring-but-necessary applications. I think it, or something like it, will become a standard part of how media is shared.
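Here is a rough sketch of how a "certified" photo might carry its when, where, and what-device details. Everything in it is an assumption for illustration: the field names, the `certify` and `verify` functions, and the demo key are all made up, and it is not Truepic's actual format. Real systems generally sign with public-key cryptography; this uses an HMAC with a shared secret only because it ships with Python's standard library.

```python
import hashlib
import hmac
import json

# Hypothetical signing key; a real camera app would not use a shared secret.
SECRET_KEY = b"hypothetical-demo-key"


def _sign(manifest: dict) -> str:
    # Serialize with sorted keys so the same manifest always signs the same way.
    payload = json.dumps(manifest, sort_keys=True).encode()
    return hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()


def certify(media_bytes: bytes, taken_at: str, location: str, device: str) -> dict:
    """Bundle capture details with a hash of the media, then sign the bundle."""
    manifest = {
        "sha256": hashlib.sha256(media_bytes).hexdigest(),
        "taken_at": taken_at,
        "location": location,
        "device": device,
    }
    return {"manifest": manifest, "signature": _sign(manifest)}


def verify(media_bytes: bytes, certificate: dict) -> bool:
    """True only if neither the media nor its claimed details were changed."""
    manifest = certificate["manifest"]
    signature_ok = hmac.compare_digest(_sign(manifest), certificate["signature"])
    content_ok = manifest["sha256"] == hashlib.sha256(media_bytes).hexdigest()
    return signature_ok and content_ok


photo = b"pretend these bytes are a photo"  # made-up sample data
cert = certify(photo, "2023-04-01T12:00:00Z", "Example City", "Example Phone")

print(verify(photo, cert))            # untouched photo and details
print(verify(photo + b"edit", cert))  # photo was altered after certification
```

Changing the claimed location without re-signing breaks the check too, which is what makes the metadata worth trusting in the first place.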

Anyone who's seen the real Nina Schick in action knows that the deepfake video is highly imperfect. The real Nina is warmer, more human, more charming, more... everything. [7] But it's an interesting demonstration of what's possible, both in terms of fakery and authenticity.

There's a reason she subtitled her book on deepfakes "The coming infocalypse." We need better weapons to fight this war. Our eyes and ears are no longer enough.

Have you been fooled by a fake? Fess up in the comments below.

[1] Well, maybe a little.

[2] If you haven't watched "The Last of Us" yet, you should. Do it now; I'll wait.

[3] "Kids" defined here as anyone under the age of 47.

[4] "Yes, I totally believe that you finished your homework before launching that video game, but show it to me anyway. Oh, the file got corrupted? How conveeeenient."

[5] Sigh.

[6] I will explain in greater detail how all this works in a future post, once I fully understand it myself.

[7] Swoon.
